OPTIMIZING BUTTERFLY CLASSIFICATION THROUGH TRANSFER LEARNING: FINE-TUNING APPROACH WITH NASNETMOBILE AND MOBILENETV2

DOI:

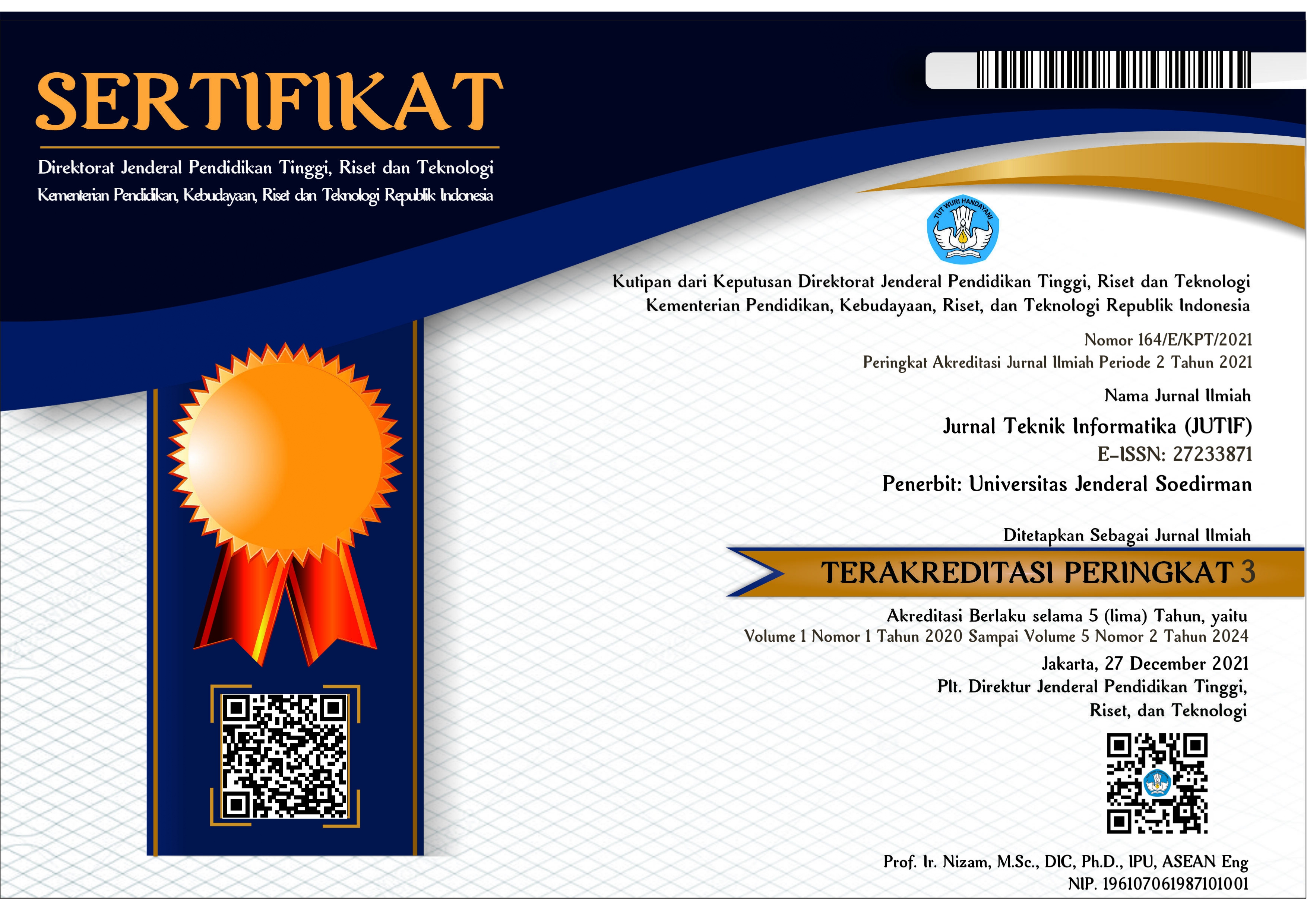

https://doi.org/10.52436/1.jutif.2024.5.3.1583Keywords:

butterfly classification, fine-tuning, MobileNetV2, NASNetMobile, transfer learningAbstract

Butterflies play a significant role in ecosystems, especially as indicators of the state of biological balance. Most butterfly species are visually distinct, although some differ only in very subtle traits. Entomologists identify butterfly species through manual taxonomy and image analysis, which is time-consuming and costly. Previous studies have applied computer vision to this task, but they relied on data covering only a narrow range of species, so the resulting programs cannot recognize many other types of butterflies. This research therefore applies computer vision with transfer learning, which improves pattern recognition on image data without the need to train from scratch. The principal transfer-learning method is fine-tuning, which consists of setting parameter values suited to the architecture and freezing selected layers of it while the remaining layers are retrained. Fine-tuning produces a significant increase in accuracy, which is visible when results before and after fine-tuning are compared. This research focuses on two Convolutional Neural Network (CNN) architectures, MobileNetV2 and NASNetMobile, both of which achieve satisfactory accuracy in classifying 75 butterfly species with transfer learning. With fine-tuning, MobileNetV2 reaches an accuracy of 86%, and NASNetMobile a slightly lower 85%.
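The abstract describes fine-tuning as freezing some layers of a pretrained network while the rest are retrained. The following sketch illustrates only that mechanism and is not the authors' implementation. It assumes a toy "deep linear" model whose layers are single scalar weights, standing in for a CNN such as MobileNetV2, and the names `Layer`, `set_trainable_top`, and `sgd_step` are hypothetical. It also assumes a common two-stage recipe that is not taken from the paper: first train only the new head, then unfreeze the top layers and continue with a smaller learning rate. In Keras, the corresponding switch is the `trainable` attribute on individual layers or on the whole base model.

```python
from dataclasses import dataclass
from math import prod


@dataclass
class Layer:
    """Hypothetical toy layer: a single scalar weight plus a trainable flag."""
    name: str
    weight: float
    trainable: bool = True


def set_trainable_top(layers, k):
    """Freeze every layer except the last k (k=0 freezes the whole model)."""
    cutoff = len(layers) - k
    for i, layer in enumerate(layers):
        layer.trainable = i >= cutoff


def forward(layers, x):
    """Toy deep linear model: y_hat = w_n * ... * w_1 * x."""
    return prod(layer.weight for layer in layers) * x


def mse(layers, data):
    """Mean squared error over (x, y) pairs."""
    return sum((forward(layers, x) - y) ** 2 for x, y in data) / len(data)


def sgd_step(layers, data, lr):
    """One full-batch gradient-descent step on MSE; frozen layers are not updated."""
    grads = [0.0] * len(layers)
    for x, y in data:
        err = forward(layers, x) - y
        for i in range(len(layers)):
            # d(y_hat)/d(w_i) is x times the product of all the other weights.
            others = prod(o.weight for j, o in enumerate(layers) if j != i)
            grads[i] += 2 * err * others * x / len(data)
    # Apply updates only after every gradient is computed (simultaneous update).
    for layer, g in zip(layers, grads):
        if layer.trainable:
            layer.weight -= lr * g


# Hypothetical "pretrained" backbone blocks plus a freshly initialised head.
model = [Layer("block1", 1.2), Layer("block2", 0.9),
         Layer("block3", 1.1), Layer("head", 0.5)]
data = [(1.0, 3.0), (2.0, 6.0)]  # toy target: y = 3x
before = mse(model, data)

# Stage 1 (feature extraction): only the head is trainable.
set_trainable_top(model, 1)
for _ in range(50):
    sgd_step(model, data, lr=0.01)

# Stage 2 (fine-tuning): also unfreeze block3, with a smaller learning rate.
set_trainable_top(model, 2)
for _ in range(50):
    sgd_step(model, data, lr=0.001)

print({layer.name: round(layer.weight, 3) for layer in model})
print(f"MSE before: {before:.3f}, after: {mse(model, data):.5f}")
```

After running, `block1` and `block2` keep exactly their starting weights because the update loop skips them, while `block3` and `head` move and the error drops. With a real CNN the same two-stage idea applies to blocks of convolutional layers rather than scalars, and the smaller stage-2 learning rate is a conventional precaution against disrupting the pretrained weights.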

